CDR Tickets

| Issue Number | 4863 |

|---|---|

| Summary | Audio import spreadsheet error |

| Created | 2020-08-26 12:00:46 |

| Issue Type | Inquiry |

| Submitted By | Osei-Poku, William (NIH/NCI) [C] |

| Assigned To | Kline, Bob (NIH/NCI) [C] |

| Status | Closed |

| Resolved | 2020-09-14 13:30:50 |

| Resolution | Fixed |

| Path | /home/bkline/backups/jira/ocecdr/issue.273636 |

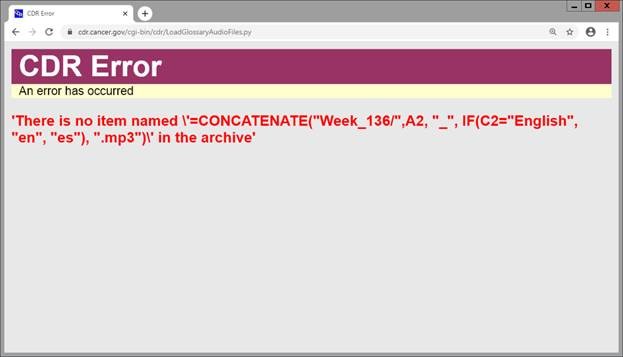

I ran into a problem importing the audio files for Week_136 (error message below). I looked at the spreadsheet and the filename column has the formula displayed in the error message. It seems to have been introduced when entering the filenames. Would the problem be fixed if I clean up the spreadsheet and replace it on the FTP server ?

Here's what I think I've been able to figure out. It looks like the use of formulas in that column's cells is not new to this set of audio files. It looks like the older spreadsheet software we were using, which is not capable of supporting modern Excel workbooks, was not preserving the formulas, but was instead returning the calculated values, so this issue didn't arise. However, when we rewrote the script to support reuse of media documents (OCECDR-4633), that older package was replaced by one which handles .xlsx files, and preserves the formulas entered into the cells. It would seem, since this issue didn't get caught during testing of Leibniz, that the test files used during that testing did not involve formulas in the filename cells, but instead had the actual string values for the media file paths (I know that was true for my own testing, as I was unaware that formulas were being entered instead of the actual file path strings).

So you have three options, I think.

Replace the existing spreadsheets and resubmit them, avoiding formulas in the filename cells for this batch as well as for future batches.

Have us modify the software to open the workbooks with a setting which discards the formulas, replacing them with their corresponding calculated values. We'd test the modification on the lower tiers, and we can try and fast-track the change if getting the current batches loaded is urgent.

Combine the first two options, replacing the formulas in the existing batch, but modify the script in the next release to allow them for future batches.

Let me know which option you would prefer.

Let's go with option 3.

With regards to replacing of the spreadsheet, do we have to resubmit all the files (renaming the files and reviewing them again) or we can just replace the spreadsheet while keeping everything else the same?

I think you should be able to just replace the spreadsheet.

It is not easy to get rid of the formulas without distorting the data so it looks like we have to go with option 2.

Please do some thorough testing on DEV, using workbooks which have formulas stored for the filename columns.

Would it be possible refresh both DEV and QA for testing this?

I have refreshed QA. I'll leave it to ~volker to work with you to find a time he can refresh DEV that will not disrupt his work on Maxwell (assuming that's possible).

Also, ~volker, can you look over the scheduled jobs on QA to make sure there aren't any more that need to be turned off. I disabled the Notify PDQ Data Partners job on QA. I also marked that job as PROD ONLY on CDR PROD so we'll have a reminder to turn that one off on the lower tiers whenever we do a refresh. Feel free to mark any others similarly on PROD as appropriate.

Hi, ~oseipokuw, I'm confirming that the workaround is on QA.

Thanks ~bkline

Please upload the attached file to the QA server for processing. Week_500.zip

Done (I assumed you meant the QA directory of the SFTP server, not the CDR QA server).

bq. Done (I assumed you meant the QA directory of the SFTP server, not the CDR QA server).

That is right. Sorry for the confusion.

Please upload this next batch (Rev1) to the same folder.

Done.

Thanks! There seems to be an error that is different from the one we are currently testing which prevents the generation of a second revision spreadsheet.

‘NoneType’ object has no attribute ‘group’

This happens when (after review is completed) you click on the submit button to generate a second sheet.

Fixed on DEV and QA.

Thanks! Please upload the attached file to the SFTP server.Week_501.zip

All set.

Thanks! Please upload the attached file to the same SFTP server. Week_501_Rev1.zip

Done.

Thanks! Please upload Rev2 to the SFTP server. Week_501_Rev2.zip

✔

We've complete review of Week 501 but they are not showing up on the audio import page to import. We also completed Week 500 but I think it will be better not to import those because that was the batch we couldn't create a Rev2 spreadsheet for. Below are the files I expected to see on the import page but I am not seeing them. It is rather showing a Week_700 file.

Week_501.zip Completed 2020-09-10 09:03:54

Week_501_Rev1.zip Completed 2020-09-10 12:20:09

Week_501_Rev2.zip Completed 2020-09-10 16:05:04

That's expected behavior as designed. The sets for the most recent week are the ones available for import. I have removed the sets from later "weeks" (it would have been less confusing, I think, if a numbering scheme had been adopted which actually represented the current week, as the publishing system does, using the ISO week-numbering system, instead of just an arbitrary number as we have now). Please try again.

Thanks! Could you please replace the attached file with the one already on the SFTP server? There was an error that prevented me from importing. I have fixed the error in the attached file. Week_501_Rev1.zip

Done.

Thanks! I continue to get the following error message "There is no item named 'Week_501_Rev1/800017_es.mp3' in the archive". I thought I had fixed it in the latest file I provided but it doesn't seem to be the case.

That's right. There's a Week_501_Rev1/800017_en.mp3 but

no Week_501_Rev1/800017_es.mp3.

Could you please remove the Week_501_Rev1.zip file from the list of imports? It doesn't look like there is an easy way of fixing this problem because I have renamed the file in the most recent file Week_501_Rev1.zip I provided but it is not able to overwrite the existing one when I try to download. When you remove it, I will modify the files and spreadsheet and provide you with another file to upload to either replace the Week_501_Rev1.zip file or name it differently. Hopefully, that should work. Sorry for all the trouble.

Done.

Thanks! Please upload the attached file. Week_502.zip

Done.

Thanks! Testing is done. Everything seems to working fine again. Please try to see if we can get this hot-fixed soon.

~oseipokuw, please try this on the production server so I can tell CBIIT the ticket can be closed.

It worked on PROD with no errors. That was really quick. Thank you, Bob!

Looking at the logs from PROD some of the Spanish media docs were not linked but I am not sure why they weren't.

CDR44945 unable to find home for 'HER2/neu ' in CDR44945

CDR339339 unable to find home for 'herencia autosómica recesiva ' in CDR339339

CDR460127 unable to find home for 'judíos asquenazíes ' in CDR460127

CDR460203 unable to find home for 'fenotipo ' in CDR460203

CDR662000 unable to find home for 'ARN mensajero ' in CDR662000

CDR700147 unable to find home for 'cervicectomía uterina radical ' in CDR700147

CDR782188 unable to find home for 'Gleostine ' in CDR782188

| File Name | Posted | User |

|---|---|---|

| audio import user error.jpg | 2020-08-26 11:59:43 | Osei-Poku, William (NIH/NCI) [C] |

| Week_500_Rev1.zip | 2020-09-08 17:22:03 | Osei-Poku, William (NIH/NCI) [C] |

| Week_500.zip | 2020-09-08 13:46:34 | Osei-Poku, William (NIH/NCI) [C] |

| Week_501_Rev1.zip | 2020-09-11 10:25:52 | Osei-Poku, William (NIH/NCI) [C] |

| Week_501_Rev1.zip | 2020-09-10 11:13:49 | Osei-Poku, William (NIH/NCI) [C] |

| Week_501_Rev2.zip | 2020-09-10 13:16:04 | Osei-Poku, William (NIH/NCI) [C] |

| Week_501.zip | 2020-09-09 18:27:45 | Osei-Poku, William (NIH/NCI) [C] |

| Week_502.zip | 2020-09-14 17:37:57 | Osei-Poku, William (NIH/NCI) [C] |

Elapsed: 0:00:00.001969