EBMS Tickets

| Issue Number | 519 |
|---|---|
| Summary | [Reports] Literature Statistics Report |
| Created | 2019-08-15 10:10:48 |
| Issue Type | Improvement |
| Submitted By | Juthe, Robin (NIH/NCI) [E] |
| Assigned To | Kline, Bob (NIH/NCI) [C] |
| Status | Closed |
| Resolved | 2019-09-30 13:26:55 |
| Resolution | Fixed |
| Path | /home/bkline/backups/jira/oceebms/issue.248397 |

We periodically assemble some statistics about our literature surveillance to present to outside groups and/or to get a better handle on how we're doing with literature surveillance in terms of the number of responses from Board members and the relevance of the articles we are selecting and sending to them for review. We would like to get some programmatic help pulling these numbers because it's very tedious and prone to error. We also have issues with comparing apples and oranges that the system may be able to help us with to some extent.

In general, these are the numbers we are interested in gathering (for a given time range, and optionally by Board and/or topic):

- Number of citations imported
- Number of citations rejected by the NOT list
- Number of citations rejected by librarians and number approved by librarians
- Number of citations published (unless this is identical to the number approved by librarians)
- Number of citations rejected by Board managers/other NCI staff and number approved by Board managers/other NCI staff
- Number of citations for which full text was retrieved
- Number of citations rejected, approved, or placed on hold by Board managers at the full-text state
- Number of citations placed in a packet for review by Board members
- Number of citations that received responses from Board members, and number that were added to a packet but did not receive a response
- Nature of the responses: yes (lumping together deserves citation, merits revision, and merits discussion) or no
- Number of citations that received an Editorial Board decision of text approved, cited, or cited (legacy)

This always gets a little tricky because some of the numbers are captured on an article basis, while others are captured based on an article-topic combination. Ideally, we would also like to be able to report on percentages of articles that we review that get selected for full text, sent to Board members, and ultimately cited in a summary, but fast-track articles could enter the process at any point along that path and "muddy" the statistics.

I have a couple of ideas for how we might clear up some of the issues we have with comparing apples and oranges:

- Report statistics separately for citations imported in a batch job vs. those imported as a fast track (maybe as two different versions of the report).
- When possible, have the ability to report statistics for the number of discrete citations overall, the number of citations by Board, and the number of citation-topic pairs for each category.

This will certainly need more discussion with you, Bob, but I wanted to get the main idea down here to initiate our discussion. I'll also post an example of some of the tables of statistics we've pulled in the past to give you a sense of how we report the data and what we're hoping to improve upon.

I wanted to add a couple of points from our discussion in yesterday's status meeting:

- We can assume a YES status for all steps preceding the state at which a fast track citation was imported.
- The ability to view statistics for fast-tracked citations separately from citations imported in a batch is a nice-to-have.

~juther:

Let's nail down what "imported" means in this context.

First of all, I see "Citations retrieved: 53,013" and "Citations imported: 76,313." What does "retrieved" mean here?

Also, the "Import" page is used for assigning new boards/topics to an article which has already been imported into the system. The report for the results of an import job is careful to apply the label "imported" only to those articles which had not already been imported into the system, and it calls the ones we already had "duplicate." Would I be correct in determining the counts for "imported" articles solely on a per-article basis? If not, what would be the value of counting an article as "imported" in August if it had already been imported in July and the import page was used to assign a second topic to the article, but not counting it as "imported" if the "full citation" page were used to do the same thing?

Good (and tough) questions 🙂

Victoria and I have struggled with the difference between citations retrieved and citations imported too and I don't have a good definition. Instead I will just explain what we want rather than try to make sense of what those confusing and imperfect numbers mean.

Imported: I agree with you that this will have to be captured on a per-article basis, and reflect the initial import of an article into the system.

Citation-Board-topic combinations: If possible, we'd like this number to reflect the event of associating an article with a Board and topic. To address your point above, this would include the addition of an article to a second topic on the Full Citation page and on the import page. It would also include citations that are associated with a Board and topic upon their initial import into the system. I think we'll need this large number to establish the denominator to compare with some of the later statistics.

Other questions on counting.

1. If an article is approved by the librarians for two different topics we'd count that as one article approval, right?
2. If an article gets approved by the librarians for one topic, and rejected for two other topics in the same reporting period, that's one article approved and one article rejected, right? (If we said the approval trumps the rejection, and we should only count the article as approved, then we'd have to justify the different results we get if either the rejection or the approval took place outside the reporting period. I suppose you could say a rejection gets ignored if the article has ever been approved for any other topic, but then the logic and the rules really start to get hairy.)

More questions to come, I'm sure.

We'd like to be able to get some of these statistics in multiple ways, i.e., on a per-article basis, on a per-Board basis, and on a per-topic basis. (That's what I meant by "optionally by Board and/or topic" above.)

Your assumptions for #1 and #2 are correct if we're talking about the statistics on a per-article basis. If we want to drill things down by Board and/or topic, it gets a little more involved.

In #1, for example, the numbers might be 1 (per-article approval), 1 (per Board approval, if both topics were for the same Board), and 2 (per-topic approval).
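To make the three granularities concrete, here is a minimal sketch that counts the scenario from question #1 at each level. It assumes each librarian approval is available as a hypothetical (article, board, topic) record and simply counts distinct keys per level; the record type, function name, and sample IDs are illustrative, not the EBMS schema.

```python
from typing import NamedTuple


class Approval(NamedTuple):
    """One librarian approval event (hypothetical record shape)."""
    article_id: int
    board: str
    topic: str


def count_approvals(approvals):
    """Count the same approval events per article, per board, and per topic."""
    return {
        # each article counts once, however many topics it was approved for
        "per_article": len({a.article_id for a in approvals}),
        # each article counts once per board
        "per_board": len({(a.article_id, a.board) for a in approvals}),
        # each article-topic pair counts once
        "per_topic": len({(a.article_id, a.topic) for a in approvals}),
    }


# Scenario #1: one article approved for two topics belonging to the same board.
events = [
    Approval(1001, "Board X", "Topic A"),
    Approval(1001, "Board X", "Topic B"),
]
print(count_approvals(events))  # {'per_article': 1, 'per_board': 1, 'per_topic': 2}
```

The same events yield 1, 1, and 2, matching the numbers in the reply above; only the key used for de-duplication changes.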

You can see why we wanted some programmatic help with all of this. 🙂

> I think we'll need this large number to establish the denominator to compare with some of the later statistics.

I would be very cautious about how these numbers are characterized. Keep in mind that the events in the life cycle of an article happen at different times. So, for example, a board member abstract approval decision which occurs within the date range specified for this report may be for an article whose import occurred before the start of that date range. The use of the term "denominator" carries some implications which could easily be misconstrued. The tighter the granularity of the report (and we are talking about drilling down to the individual topics, with no restrictions on the narrowness of the date range for the report), the more misleading such characterizations would be. If you were to ask the software to perform calculations using a "denominator" derived from the numbers we're gathering, you could easily have the report blow up with a divide-by-zero exception. 🙂

I think the best we can do is to present the numbers as representing events which occurred during the time period for the report, making it clear that the numbers for each of those actions are not necessarily for the same set of articles (or boards, or topics). We can say that the numbers convey a general impression of trends but avoid trying to say things like "x.y% of the articles imported were approved following a review of the articles' abstracts."

Make sense?

> We can assume a YES status for all steps preceding the state at which a fast track citation was imported.

Same approach if an article is imported without using the "fast-track" option, and a board manager adds a decision directly on the "full-citation" page, skipping intermediate states? I'm guessing that the answer is "yes" for this question, though it makes one wonder about the value of separate "fast-track" statistics, since this approach of jumping the queue on the "full citation" page accomplishes the same result, but without falling under the "fast-track" rubric.

Yes, this all makes sense. I know the numbers aren't perfect, and we don't expect the numbers in each category to represent the same set of articles, but we do hope to use the statistics to show how we whittle down the articles for review and again for inclusion in the summaries. If we calculate any percentages (not expecting the report to do this), we fully recognize that there are several limitations and they give us a rough approximation only.

Yes, I agree with you.

Good point about the full citation page being another way to "fast track" an article. Gosh, this is complicated. I think you're right that we can hold off on providing separate statistics for the fast tracked articles for the time being. Save that for a future release.

For board member responses which say "no way" or "yes, please" I can easily determine when the response came in. For the column representing articles in packets which "did not receive a response," I assume that I should count an article if it was put in a packet at or after the start of the date range for the report, ignoring the end of the date range, since the answer to the question "when did the board members not respond?" is "continuously, from the time the review was assigned until now." Right?
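The rule proposed above (apply only the start of the date range to non-responses) can be sketched as follows. This assumes a hypothetical list of (article ID, date assigned) pairs and a set of article IDs that already have a response; the function and parameter names are illustrative only.

```python
from datetime import date


def count_no_response(assignments, responded, start):
    """Count distinct articles assigned on or after `start` that have no response.

    assignments: iterable of (article_id, date_assigned) pairs (hypothetical shape)
    responded:   set of article IDs with at least one board member response
    The end of the report's date range is deliberately not applied: a missing
    response is an ongoing condition, not an event with a date.
    """
    return len({
        article_id
        for article_id, assigned in assignments
        if assigned >= start and article_id not in responded
    })


assignments = [
    (1, date(2019, 7, 1)),   # assigned before the range starts: excluded
    (2, date(2019, 8, 5)),   # in range, no response: counted
    (3, date(2019, 8, 20)),  # in range, but a response came in: excluded
]
print(count_no_response(assignments, responded={3}, start=date(2019, 8, 1)))  # 1
```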

Capturing the decision we made in your office yesterday afternoon, ~juther: we will drop the logic to imply earlier decisions for fast-track articles, or for articles whose decision was applied on the "full citation" page (skipping intermediate steps). The software to implement this filling-in-the-gaps approach would have been much more convoluted, error-prone, and harder to maintain and enhance than the more straightforward approach of counting decisions which were explicitly recorded. This approach also restores our ability to distinguish between fast-tracked articles and standard-path articles, and it more accurately reflects the work actually performed by the organization.

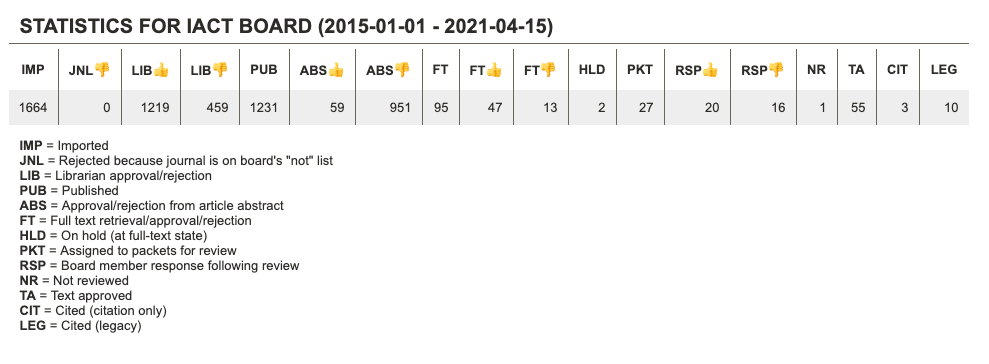

Capturing the HTML version I have implemented so you can appreciate the header icons before I switch over to generating an Excel report, since that will make it easier (or indeed, possible) to generate more complete, readable tables, as well as make it easier for you to grab the numbers.

I love it!!

Implemented on DEV.

Right, your assumption sounds good to me.

This looks great, Bob. We'll review it more closely but I think this will be so helpful and I like the way you've organized it. Thank you!!!

🙂

Meant this to go somewhere else, but Jira won't let me delete or move the comment. 🙁

🙂

We reviewed this one in our meeting as well and everyone is really excited about having/using these statistics. We have a few questions/requests:

- We were a little confused by the difference in numbers between articles that were approved at the abstract state and for which full text was retrieved. The full text numbers are almost double. We'd expect some more full-text retrievals due to fast tracks and other projects but this is a larger difference than we were expecting. Could you explain how those numbers were gathered? (It would actually be helpful to discuss the logic for each of these fields just to be sure we understand how the numbers are being pulled; we'll want to document this so we can refer back to it when questions arise.)
- Would it be possible to freeze the left column on all of the sheets?
- Please change the column headings "Initial Approval" and "Initial Rejection" to "Librarian Approval" and "Librarian Rejection".
- Please change the column headings "Approved from Abstract" and "Rejected From Abstract" to "Abstract Approval" and "Abstract Rejection", and likewise "Approved/Rejected From Full Text" to "Full Text Approval/Rejection" (this is just to save a little space).
- Please move the "All Boards" row in Sheet 1 and the "All Topics" rows in the remaining sheets to the top of the spreadsheet and add a blank line beneath it before going into the results by Board/topic. (Rationale: this is likely the number Margaret will use most often and it is also less likely to be misconstrued as a total if it's moved to the top.)
- Would it be possible to add a second header spanning the three Board member response columns to say "Board Member Responses"?

Thank you!

Report Logic

Here is the logic driving how the numbers are derived for the columns.

Imported

For this column, you had asked that we treat this as the answer to one of the following questions, depending on whether we're gathering overall, per-board, or per-topic statistics:

- How many articles were imported during this date range?
- For how many articles was a state first assigned for one of this board's topics during this period?
- For how many articles was a state first assigned for this topic during this period?

So the software first creates a virtual table, showing the date of the first state assigned for each article (narrowed by board or topic if appropriate). Then it constructs a second query to find out how many of those "first state dates" fall within the date range for the report.
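The two-step "virtual table" approach above can be sketched as a subquery that finds each article's first state date, wrapped by an outer query that counts the dates falling in the range. The table and column names below are a hypothetical, simplified stand-in for the EBMS article state table, and SQLite is used only so the sketch runs standalone.

```python
import sqlite3

# Hypothetical, simplified stand-in for the EBMS article state table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE article_state ("
    " article_id INTEGER, board_id INTEGER, topic_id INTEGER,"
    " state TEXT, entered TEXT)"  # entered: ISO date such as '2019-08-15'
)
conn.executemany(
    "INSERT INTO article_state VALUES (?, ?, ?, ?, ?)",
    [
        (1, 10, 100, "imported", "2019-07-20"),  # first imported in July
        (1, 10, 101, "imported", "2019-08-05"),  # second topic added in August
        (2, 10, 100, "imported", "2019-08-10"),
        (3, 20, 200, "imported", "2019-08-12"),
    ],
)


def count_imported(conn, start, end, board_id=None, topic_id=None):
    """Count articles whose first state (optionally narrowed) falls in [start, end]."""
    sql = """
        SELECT COUNT(*)
          FROM (SELECT article_id, MIN(entered) AS first_entered
                  FROM article_state
                 WHERE (:board IS NULL OR board_id = :board)
                   AND (:topic IS NULL OR topic_id = :topic)
              GROUP BY article_id) AS firsts  -- the "virtual table"
         WHERE first_entered BETWEEN :start AND :end
    """
    params = {"board": board_id, "topic": topic_id, "start": start, "end": end}
    return conn.execute(sql, params).fetchone()[0]


print(count_imported(conn, "2019-08-01", "2019-08-31"))                # 2
print(count_imported(conn, "2019-08-01", "2019-08-31", topic_id=101))  # 1
```

Narrowing happens inside the subquery, so article 1 is not "imported" in August overall, but it is for topic 101, whose first state for that topic was assigned in August.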

Per-state counts

For most of the columns in the report (the six columns preceding the Full Text Retrieved column, and three more following that column), we find all the rows in the article state table for a specific state, narrowed by board or topic as appropriate, where the state was assigned during the report's date range.
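A minimal sketch of one per-state column follows, again against a hypothetical simplified table in SQLite. It assumes the rows found are reduced to distinct articles, so that an article approved for two topics counts once at the article level (consistent with question #1 earlier in the ticket); the state names are placeholders, not EBMS values.

```python
import sqlite3

# Hypothetical, simplified article state table (not the real EBMS schema).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE article_state (article_id INTEGER, board_id INTEGER,"
             " topic_id INTEGER, state TEXT, entered TEXT)")
conn.executemany("INSERT INTO article_state VALUES (?, ?, ?, ?, ?)", [
    (1, 10, 100, "librarian_approved", "2019-08-02"),
    (1, 10, 101, "librarian_approved", "2019-08-02"),  # same article, second topic
    (2, 10, 100, "librarian_rejected", "2019-08-03"),
    (3, 20, 200, "librarian_approved", "2019-06-30"),  # outside the range
])


def count_state(conn, state, start, end, board_id=None, topic_id=None):
    """Count distinct articles with `state` assigned in [start, end]."""
    sql = """
        SELECT COUNT(DISTINCT article_id)
          FROM article_state
         WHERE state = :state
           AND entered BETWEEN :start AND :end
           AND (:board IS NULL OR board_id = :board)
           AND (:topic IS NULL OR topic_id = :topic)
    """
    params = {"state": state, "start": start, "end": end,
              "board": board_id, "topic": topic_id}
    return conn.execute(sql, params).fetchone()[0]


print(count_state(conn, "librarian_approved", "2019-08-01", "2019-08-31"))  # 1
```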

Full Text Uploaded

For this column, we look for articles whose full text PDF was uploaded during the report's date range (and, if we're narrowing by board or topic, for which we have at least one row in the state table for that board or topic).
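The Full Text Uploaded logic above combines a date test on the upload with an existence test on the state table when narrowing. This sketch assumes hypothetical `article` and `article_state` tables in SQLite; the real EBMS tables and column names may differ.

```python
import sqlite3

# Hypothetical tables: `article` carries the upload date of the full-text PDF
# (NULL if none); `article_state` links articles to boards and topics.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE article (article_id INTEGER PRIMARY KEY, full_text_uploaded TEXT);
    CREATE TABLE article_state (article_id INTEGER, board_id INTEGER, topic_id INTEGER);
    INSERT INTO article VALUES (1, '2019-08-10'), (2, '2019-08-15'),
                               (3, '2019-06-01'), (4, NULL);
    INSERT INTO article_state VALUES (1, 10, 100), (2, 20, 200),
                                     (3, 10, 100), (4, 10, 101);
""")


def count_full_text(conn, start, end, board_id=None, topic_id=None):
    """Count articles whose PDF was uploaded in [start, end]; when narrowing,
    require at least one state row for the requested board or topic."""
    sql = """
        SELECT COUNT(*)
          FROM article a
         WHERE a.full_text_uploaded BETWEEN :start AND :end
           AND ((:board IS NULL AND :topic IS NULL)
                OR EXISTS (SELECT 1 FROM article_state s
                            WHERE s.article_id = a.article_id
                              AND (:board IS NULL OR s.board_id = :board)
                              AND (:topic IS NULL OR s.topic_id = :topic)))
    """
    params = {"start": start, "end": end, "board": board_id, "topic": topic_id}
    return conn.execute(sql, params).fetchone()[0]


print(count_full_text(conn, "2019-08-01", "2019-08-31"))               # 2
print(count_full_text(conn, "2019-08-01", "2019-08-31", board_id=10))  # 1
```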

Assigned for Review

This column looks at how many articles are in packets which were created during the report's date range (again, narrowed as appropriate by topic or board).
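For the Assigned for Review column, a sketch might join packets to their articles and count distinct articles in packets created during the range. It assumes hypothetical `packet`, `packet_article`, and `topic` tables in which each packet belongs to one topic and each topic to one board; none of these names are taken from EBMS.

```python
import sqlite3

# Hypothetical packet tables; each packet belongs to one topic, each topic to one board.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE topic (topic_id INTEGER PRIMARY KEY, board_id INTEGER);
    CREATE TABLE packet (packet_id INTEGER PRIMARY KEY, topic_id INTEGER, created TEXT);
    CREATE TABLE packet_article (packet_id INTEGER, article_id INTEGER);
    INSERT INTO topic VALUES (100, 10), (101, 10), (200, 20);
    INSERT INTO packet VALUES (1, 100, '2019-08-05'), (2, 101, '2019-08-20'),
                              (3, 200, '2019-07-01');
    INSERT INTO packet_article VALUES (1, 1), (1, 2), (2, 1), (3, 3);
""")


def count_assigned(conn, start, end, board_id=None, topic_id=None):
    """Count distinct articles in packets created in [start, end]."""
    sql = """
        SELECT COUNT(DISTINCT pa.article_id)
          FROM packet p
          JOIN packet_article pa ON pa.packet_id = p.packet_id
          JOIN topic t ON t.topic_id = p.topic_id
         WHERE p.created BETWEEN :start AND :end
           AND (:board IS NULL OR t.board_id = :board)
           AND (:topic IS NULL OR p.topic_id = :topic)
    """
    params = {"start": start, "end": end, "board": board_id, "topic": topic_id}
    return conn.execute(sql, params).fetchone()[0]


print(count_assigned(conn, "2019-08-01", "2019-08-31"))  # 2 (article 1 counted once)
```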

Reviewer Responses

These three columns count distinct articles linked to board member reviews for specific dispositions (narrowed as appropriate, etc.). The first of these columns looks for any disposition which is not "No changes warranted" and the next column looks for that specific disposition. The last of these columns counts articles in packets for which there are no rows in the `ebms_article_review` table.
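The three response columns can be sketched in plain Python. This assumes hypothetical inputs: a set of in-scope (packet, article) pairs and a list of (packet, article, disposition) tuples standing in for rows of `ebms_article_review`. Only the "No changes warranted" string comes from the ticket; the other disposition text is illustrative.

```python
NO_CHANGES = "No changes warranted"  # disposition named in the ticket


def response_columns(packet_articles, reviews):
    """Return distinct-article counts for the three reviewer response columns.

    packet_articles: set of (packet_id, article_id) pairs in scope (hypothetical)
    reviews: iterable of (packet_id, article_id, disposition) tuples,
             one per disposition recorded in a review (hypothetical)
    """
    # Only consider reviews for packet/article pairs that are in scope.
    reviews = [r for r in reviews if (r[0], r[1]) in packet_articles]
    yes = {art for _, art, disp in reviews if disp != NO_CHANGES}
    no = {art for _, art, disp in reviews if disp == NO_CHANGES}
    reviewed = {(pkt, art) for pkt, art, _ in reviews}
    no_response = {art for pkt, art in packet_articles if (pkt, art) not in reviewed}
    return {"yes": len(yes), "no": len(no), "no_response": len(no_response)}


in_scope = {(1, 101), (1, 102), (1, 103), (2, 101)}
reviews = [
    (1, 101, "Merits revision"),  # any other disposition counts as "yes"
    (1, 102, NO_CHANGES),
    (2, 101, NO_CHANGES),         # same article, different packet
]
print(response_columns(in_scope, reviews))  # {'yes': 1, 'no': 2, 'no_response': 1}
```

Because each column is a separate distinct-article count, article 101 shows up under both "yes" and "no" here; the columns are not meant to sum to the number of articles assigned.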

Board Decisions

For each of the remaining columns, one per decision found in the `ebms_article_board_decision_value` table, the software looks for articles having board decision states (again narrowed by board or topic as appropriate) linked to that decision.

Additional enhancements

All of the new enhancements requested were implemented except for the new column-spanning header, which is non-trivial enough to go into a subsequent release.


I was curious that you only wanted the left column frozen, but not the header rows. I would have thought, at least for boards like Victoria's, that if we're freezing anything we'd want to do both.

Let's look at some specific numbers that you're thinking look suspicious. As you know, it's difficult to assess correctness because of the ways in which the numbers are not comparable from one column or row to another, as discussed throughout this ticket.

Regarding the comment about the frozen header... you are correct, it would be a good idea to freeze the header row, too. We were reviewing the report with all the Boards and only a few rows when we made most of our observations so it wasn't an issue. Thanks for catching that!

Done.

Hi Bob, as we discussed in the status meeting, could you please change the default date range for this report to be one year (rather than three years)? Thanks.

Date range adjusted on DEV and QA.
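For reference, a one-year default range might be computed as in this sketch. The function name and the choice to fall back to February 28 when the end date is February 29 are assumptions, not the EBMS code.

```python
from datetime import date


def default_date_range(today=None):
    """Return (start, end) covering the year ending today (hypothetical helper)."""
    end = today or date.today()
    try:
        start = end.replace(year=end.year - 1)
    except ValueError:  # end is Feb 29 and the prior year has no Feb 29
        start = end.replace(year=end.year - 1, day=28)
    return start, end


print(default_date_range(date(2019, 9, 30)))
```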

Looks good, thanks!

Verified on QA.

| File Name | Posted | User |
|---|---|---|
| EBMS stats for JIRA.pptx | 2019-08-22 10:29:11 | Juthe, Robin (NIH/NCI) [E] |
| image-2019-08-28-04-41-08-475.png | 2019-08-28 04:41:39 | Kline, Bob (NIH/NCI) [C] |
