CDR Tickets

| Issue Number | 4769 |
|---|---|
| Summary | [Internal] Log reader fails with utf-8 decoding failure |
| Created | 2020-01-31 17:02:36 |
| Issue Type | Bug |
| Submitted By | Kline, Bob (NIH/NCI) [C] |
| Assigned To | Kline, Bob (NIH/NCI) [C] |
| Status | Closed |
| Resolved | 2020-04-20 16:17:37 |
| Resolution | Fixed |
| Path | /home/bkline/backups/jira/ocecdr/issue.256033 |

Example error message:

d:\cdr\Log\session-2020-01-31.log: 'utf-8' codec can't decode byte 0xe1 in position 6528: invalid continuation byte
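The same message can be reproduced in a few lines. This is a minimal sketch using an invented sample string (not text from the actual log): a line written as latin-1 stores "á" as the single byte 0xe1, which a UTF-8 decoder treats as the start of a multi-byte sequence and then rejects when the next byte is not a continuation byte.

```python
# Invented sample text; the real log line is not shown in the ticket.
line = "Bogotá, Colombia"

# Written as latin-1, "á" becomes the single byte 0xe1.
latin1_bytes = line.encode("latin-1")

error = None
try:
    latin1_bytes.decode("utf-8")
except UnicodeDecodeError as exc:
    # 0xe1 announces a three-byte UTF-8 sequence, but the next byte is
    # a plain ASCII comma, so the decoder gives up at this position.
    error = exc

print(error)
```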

~volker: The problem turns out to be that on Windows (wouldn't you know?) the default encoding for the Python logging.FileHandler class is latin-1. I can think of four possible solutions:

1. Explicitly set the handler encoding to utf-8 in both places (cdr.py and settings.py) where we have logger factories.
2. Change the encoding used by log-tail.py's file-reading code to latin-1.
3. Add a loop to the log-tail.py script so we try the utf-8 encoding, and if that fails we fall back on latin-1.
4. Combination of #1 and #3.
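For illustration, the combined approach might look roughly like the sketch below. The names `make_logger` and `read_log` are hypothetical stand-ins; the real factories in cdr.py and settings.py and the reader in log-tail.py are not shown in this ticket and may be organized quite differently.

```python
import logging
from pathlib import Path


def make_logger(name, path):
    """Hypothetical logger factory that always writes UTF-8 (option 1)."""
    logger = logging.getLogger(name)
    # Passing encoding explicitly avoids the platform default.
    handler = logging.FileHandler(path, encoding="utf-8")
    handler.setFormatter(logging.Formatter("%(asctime)s [%(levelname)s] %(message)s"))
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger


def read_log(path, encodings=("utf-8", "latin-1")):
    """Hypothetical reader that tries each encoding in turn (option 3)."""
    data = Path(path).read_bytes()
    for encoding in encodings:
        try:
            return data.decode(encoding)
        except UnicodeDecodeError:
            continue
    # Not reached while latin-1 is in the list, since latin-1 maps every byte.
    raise ValueError(f"{path}: none of {encodings} could decode the file")
```

Because latin-1 can decode any byte sequence, it only makes sense as the last entry in the list: placed first, it would always "succeed" and the UTF-8 attempt would never run.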

Drawbacks of the current situation:

- some logs can't be read by log-tail.py
- some log entries silently (sort of) fall on the floor, not ending up in the log at all
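The lost entries can be demonstrated with a small sketch. It assumes a handler opened with latin-1 and the default strict error handling; the logger name, function name, and sample messages are invented for the example. When a message contains a character latin-1 cannot represent, the handler's write fails, the logging module reports the error on stderr (unless `logging.raiseExceptions` is turned off), and the record never reaches the file.

```python
import logging


def write_with_latin1(path, messages):
    """Log each message through a latin-1 handler and return the file's text."""
    logger = logging.getLogger("latin1-demo")  # hypothetical logger name
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.FileHandler(path, encoding="latin-1")
    logger.addHandler(handler)
    try:
        for message in messages:
            # An unencodable message raises inside the handler; logging
            # catches it, prints a traceback to stderr, and moves on.
            logger.info(message)
    finally:
        logger.removeHandler(handler)
        handler.close()
    with open(path, encoding="latin-1") as fp:
        return fp.read()
```

Calling `write_with_latin1("demo.log", ["café", "Москва", "done"])` should return text containing the first and last messages but not the Cyrillic one.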

Drawbacks of solution #1:

- we'll end up with some mixed logs whose earlier entries were written as latin-1 followed by lines which are encoded as utf-8
- until hoover archives off the latin-1 lines, those files will still be unreadable by log-tail.py (I imagine that for some log files, hoover will never retire the latin-1 lines)
- it's possible (but extremely unlikely) that there's some code somewhere which depends on the logs being latin-1-encoded

Drawbacks of solution #2:

- we're stuck with a parochial encoding
- we continue to lose log entries
- any log entries written by custom loggers (not created by one of our factory methods) using utf-8 will be readable but garbled

Drawbacks of solution #3:

- we continue to lose log entries
- we're still stuck with a limiting encoding
- we have the same mongrel files waiting for hoover cleanup (or Godot)

Drawbacks for solution #4:

- as long as we have mixed-encoding files UTF-8 records will be garbled on output (and there will be more of those until hoover archives off most of the latin-1 entries)
- possible (but very unlikely) breakage of code expecting latin-1 in the logs
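The garbling in a mixed-encoding file can be seen in a short sketch. It assumes the reader decodes the whole file with one encoding at a time, and the two sample lines are invented: the old latin-1 line makes the UTF-8 attempt fail, so the fallback decodes everything as latin-1 and the newer UTF-8 line comes out as mojibake.

```python
# One older line written as latin-1, one newer line written as UTF-8.
latin1_line = "Bogotá\n".encode("latin-1")
utf8_line = "café\n".encode("utf-8")
mixed = latin1_line + utf8_line

try:
    text = mixed.decode("utf-8")
except UnicodeDecodeError:
    # The latin-1 byte 0xe1 is not valid UTF-8 here, so fall back.
    text = mixed.decode("latin-1")

# The latin-1 line is intact; the UTF-8 "é" (bytes 0xc3 0xa9) shows as "Ã©".
print(repr(text))  # 'Bogotá\ncafÃ©\n'
```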

My vote is for #4. I would prefer to have the log files written in the common and complete encoding used by most of the civilized world, and I can live with some garbled utf-8 on a temporary basis to get there. If there's any code which depends on the logs being latin-1, I'd rather fix that code than live with an obsolete encoding.

I will hold off on proceeding with the ticket until you weigh in with your vote. Tell me what you think and assign the ticket back to me.

A footnote: at least part of the reason I didn't notice this problem earlier is that much of the work done on testing the new logging functionality, introduced when we eliminated the C++ server, was tested on my GFE laptop, where the default Python encoding is the more sensible and useful utf-8. 🙂

There's no reason why we would have to wait forever until Hoover kicks in. We should be able to release the Kraken (a.k.a. Hoover), squirrel away those older log entries causing the problem, and start fresh for those logs.

You make a compelling case for option #4. Who wants to be viewed as part of the uncivilized world? 🙂

Sounds good. Logging nirvana, here we come.

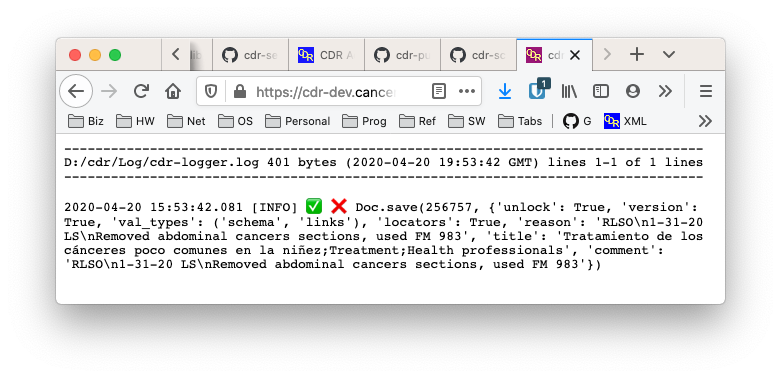

Installed on DEV. I added a bonus: you can now add an encoding parameter to the URL to force the use of a specific encoding. Don't expect it to be useful very often, but it's there in case we need it for any Russian logs you pick up.
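How log-tail.py actually handles the parameter isn't shown in this ticket. As a rough sketch under that caveat, a script could pull an `encoding` value from the query string and check that Python recognizes the codec before using it. The function name, the example URL, and the default of utf-8 are all assumptions made for illustration.

```python
import codecs
from urllib.parse import parse_qs, urlparse


def encoding_from_url(url, default="utf-8"):
    """Return the encoding named in the URL, or the default if absent or unknown."""
    params = parse_qs(urlparse(url).query)
    name = params.get("encoding", [default])[0]
    try:
        # codecs.lookup raises LookupError for names Python doesn't know.
        codecs.lookup(name)
    except LookupError:
        return default
    return name
```

With a hypothetical URL such as `https://example.org/log-tail.py?path=session.log&encoding=koi8-r`, this returns `koi8-r`; an unrecognized name falls back to the default rather than raising.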

Log reader tested on PROD. Closing ticket.

| File Name | Posted | User |
|---|---|---|
| image-2020-04-20-15-57-39-121.png | 2020-04-20 15:57:39 | Kline, Bob (NIH/NCI) [C] |
